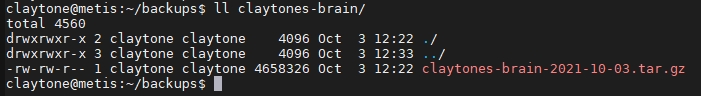

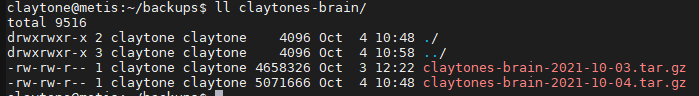

In yesterday’s post I created a crontab that saves a backup copy of my Obsidian vault to my server every night. This morning, however, I was met with this:

That column between the username and the date is the file size in bytes. Pretty odd that yesterday’s notes would be ~5 MB, but today’s are only 45 bytes…

Notice also that the owner of today’s file is root, not claytone.

So, looks like we have some work to do.

Solving the ownership issue was simple. When I created the crontab yesterday, I used `sudo crontab -e` as instructed by Stack Overflow. However, that edits root’s crontab, not my user’s. I ran `sudo crontab -e` again and commented out the line from yesterday. Then I set up a new job using `crontab -e` without sudo, so that claytone would be creating all of the backups.
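
To double-check which user a backup ended up belonging to, something like the sketch below could help. The helper name `report_owner` is made up for illustration, and `stat -c` assumes GNU coreutils, which is what most Linux servers ship.

```shell
# To see where a job really lives, compare the two crontabs by hand:
#   crontab -l        # the current user's jobs
#   sudo crontab -l   # root's jobs

# report_owner FILE: print who owns FILE and flag it if that is not the current user.
# (Hypothetical helper, not part of the original setup.)
report_owner() {
    local file="$1"
    local owner
    owner=$(stat -c '%U' "$file") || return 1
    if [ "$owner" = "$(id -un)" ]; then
        echo "$file is owned by $owner"
    else
        echo "$file is owned by $owner, expected $(id -un)"
    fi
}
```

Running `report_owner` on the newest backup file would have flagged the root-owned archive right away.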

Troubleshooting the rest was a bit trickier.

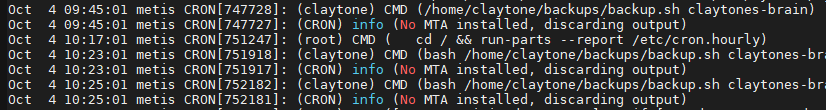

When cron jobs run, they send a copy of their output to the user’s mailbox on the server itself. However, I didn’t even have a mail server set up, so the output was discarded. I discovered this using `cat /var/log/syslog | grep CRON | less`.
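
The same search works without the extra `cat`. This is only a sketch: `cron_lines` is a made-up helper name, and the default path assumes a Debian/Ubuntu-style `/var/log/syslog` (other distros may log cron elsewhere).

```shell
# cron_lines [LOGFILE]: print only the cron-related lines from a syslog-style file.
# Falls back to /var/log/syslog when no file is given (an assumption about the distro).
cron_lines() {
    grep 'CRON' "${1:-/var/log/syslog}"
}

# Usage: page through the matches, newest at the bottom.
#   cron_lines | less
```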

Oddly enough, I couldn’t even redirect the output of the cron job to a log file. It had to go through mail.

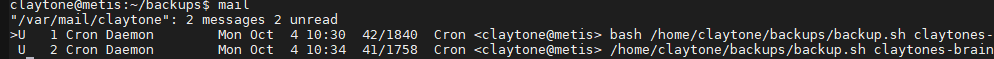

I did some googling and installed postfix to deliver mail and mailutils to read it. I was then able to view my cron output by checking my inbox with the `mail` command.

Aha! When I moved the gdrive binary to /usr/local/bin, I didn’t realize that cron jobs run with a more limited PATH than my login shell, so the job couldn’t find it. I updated the script to use the full path to the gdrive command:

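    set -x

    # Require exactly one argument: the name of the file to back up.
    if [ "$#" -ne 1 ]; then
        echo "Invalid args. Please pass a file name to download."
        exit 1
    fi

    # cron could not find gdrive on its PATH, so call it by its full path.
    gdrive_loc='/usr/local/bin'
    file_name=$1
    file_loc="/home/claytone/backups/$file_name"

    # Strip the file name and everything after it from the matching line, leaving the file ID.
    sed_expression='s/\(.*\)\s.*'$file_name'.*/\1/'
    file_id=$("$gdrive_loc/gdrive" list --query "name contains '$file_name'" | grep "$file_name" | sed -e "$sed_expression")

    # $file_id is left unquoted on purpose: word splitting trims any whitespace sed leaves around the ID.
    "$gdrive_loc/gdrive" download --path "$file_loc" --recursive -f $file_id

    tar -czf "$file_loc/$file_name-$(date +'%Y-%m-%d').tar.gz" "$file_loc/$file_name"

    # Remove non-compressed version
    rm -r "$file_loc/$file_name"

    # If there are more than 10 days of history, remove the oldest file in the dir.
    # ls -1 is used for counting because ls -l adds a "total" line that skews the count.
    if [ "$(ls -1 "$file_loc" | wc -l)" -gt 10 ]; then
        oldest_file="$file_loc/$(ls -t "$file_loc" | tail -n 1)"
        echo "Removing $oldest_file"
        rm -r "$oldest_file"
    fi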
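
The PATH problem can also be reproduced without waiting for cron. The sketch below looks a command up in a stripped-down environment. The helper name `visible_in_path` is made up, and `/usr/bin:/bin` is an assumed cron-like PATH (common on Debian-family systems, but worth confirming with a job that runs `env`).

```shell
# visible_in_path CMD PATH_VALUE: succeed if CMD can be found using only PATH_VALUE.
# env -i clears the environment, roughly imitating how little cron passes along.
visible_in_path() {
    env -i PATH="$2" /bin/sh -c 'command -v "$1" >/dev/null 2>&1' sh "$1"
}

# Assumed cron-like PATH; yours may differ.
cron_path='/usr/bin:/bin'

if visible_in_path gdrive "$cron_path"; then
    echo "gdrive is visible with PATH=$cron_path"
else
    echo "gdrive is NOT on $cron_path; use its full path or set PATH in the crontab"
fi
```

Hard-coding the full path, as the script does, is the most explicit fix. Adding a `PATH=...` line at the top of the crontab is the usual alternative.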

This time I tested the crontab by scheduling the job to run very shortly after I closed the file. And presto, now it appears to work!
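
For this kind of quick test, the schedule fields can be generated rather than worked out by hand. A minimal sketch, assuming GNU `date` (for `-d` and the `%-M` no-padding flag); `cron_line_in` and the script path are hypothetical.

```shell
# cron_line_in MINUTES COMMAND: print a crontab line that fires MINUTES from now.
# Relies on GNU date; the entry repeats daily at that time until it is edited or removed.
cron_line_in() {
    local when
    when=$(date -d "+$1 minutes" +'%-M %-H') || return 1
    # Fields: minute hour day-of-month month day-of-week command
    echo "$when * * * $2"
}

# Hypothetical script path and argument, for illustration only.
cron_line_in 2 '/path/to/backup.sh Vault'
```

Paste the printed line into `crontab -e`, wait a couple of minutes, then put the real nightly schedule back once the job has been seen to run.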

Happy debugging, y’all.