The Problem

I recently started using Obsidian to bring some order to my large collection of poorly organized notes. Since I use multiple devices, I need to keep my vault in sync at all times. While you can pay for Obsidian’s proprietary sync service, I’ve opted to set up my own.

I wanted to create a backup system on Metis (my home server) in case I ever overwrite my Obsidian vault on Google Drive. This has happened to me before: an encrypted password vault dropped a bunch of keys (rather than merging changes) when I made an edit from a different device.

This will be a one-way sync that pulls the vault down from Google Drive every night and archives it, leaving a copy that the sync service can’t overwrite.

I also want it to be general enough that I can make regular backups of other files that change frequently on my Google Drive.

Setup

The first step is installing gdrive, a command-line client for Google Drive. This lets me interact with Drive from the shell.

I grabbed the gdrive_2.1.1_linux_386.tar.gz archive from the project’s GitHub Releases page, unpacked it, and moved the gdrive binary to /usr/local/bin so it’s on my PATH. Don’t forget to reload your shell session afterwards.

```console
claytone@metis:~$ wget https://github.com/prasmussen/gdrive/releases/download/2.1.1/gdrive_2.1.1_linux_386.tar.gz
claytone@metis:~$ tar -xvf gdrive_2.1.1_linux_386.tar.gz
claytone@metis:~$ sudo mv gdrive /usr/local/bin
claytone@metis:~$ source ~/.bashrc
claytone@metis:~$ gdrive list
```

Scripting

Setting up a bash script to pull the file from Drive is pretty easy.

However, I don’t want to keep tons of unnecessary copies around. If I really mess up the sync, I should notice within 10 days, so once there are 10 days of archives I’ll drop the oldest one each day.

Note that there is a bug in this script! Check out this post for the corrected version!

```bash
#!/bin/bash
set -x

# cron runs jobs with a minimal PATH (usually /usr/bin:/bin), which does not
# include /usr/local/bin, where gdrive was installed
export PATH="/usr/local/bin:$PATH"

if [ "$#" -ne 1 ]; then
    echo "Invalid args. Please pass a file name to download." >&2
    exit 1
fi

file_name=$1
file_loc="/home/claytone/backups/$file_name"

# Get file ID from searching gdrive: grep drops the header row,
# awk keeps the first column (the ID)
# TODO: if file not found, quit
file_id=$(gdrive list --query "name contains '$file_name'" | grep -- "$file_name" | awk '{ print $1 }')

# Download and compress file (-C stores paths relative to $file_loc)
mkdir -p "$file_loc"
gdrive download --path "$file_loc" --recursive -f "$file_id"
tar -czf "$file_loc/$file_name-$(date +'%Y-%m-%d').tar.gz" -C "$file_loc" "$file_name"

# Remove non-compressed version
rm -r "$file_loc/$file_name"

# If there are more than 10 archives, remove the oldest file in the dir
# (ls -1 prints one name per line; ls -l would also count its "total" line)
if [ "$(ls -1 "$file_loc" | wc -l)" -gt 10 ]; then
    oldest_file="$file_loc/$(ls -t "$file_loc" | tail -n 1)"
    echo "Removing $oldest_file"
    rm -r "$oldest_file"
fi
```

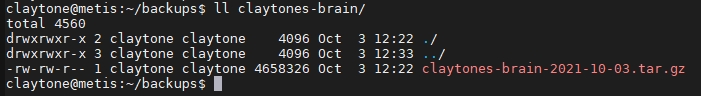

It appears to work!
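The 10-day rotation can also be written as a standalone function. This is a sketch, not part of the original script: prune_backups is a hypothetical helper, and it assumes archive names end in -YYYY-MM-DD.tar.gz (as produced by the tar line in the script), so sorting by name is the same as sorting by date and the result doesn’t depend on file modification times.

```bash
#!/bin/bash

# Hypothetical helper: delete all but the newest $2 (default 10) archives in $1.
# Assumes names end in -YYYY-MM-DD.tar.gz, so sorting by name sorts by date.
prune_backups() {
    local dir=$1
    local keep=${2:-10}
    local -a archives
    local i excess

    # Glob results are sorted by name, so the oldest archives come first.
    # nullglob makes an empty directory yield an empty array instead of the pattern.
    shopt -s nullglob
    archives=("$dir"/*.tar.gz)
    shopt -u nullglob

    # Delete from the oldest end until only $keep archives remain.
    excess=$(( ${#archives[@]} - keep ))
    for (( i = 0; i < excess; i++ )); do
        echo "Removing ${archives[i]}"
        rm -- "${archives[i]}"
    done
}
```

Calling prune_backups "$file_loc" 10 at the end of the script would keep exactly ten archives even if several runs were missed, since it removes every excess file rather than just one per run.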

Automation

To run the backup automatically, create a cron job that fires at a fixed time. I want the backups to trigger at 3 a.m. every day, so I run:

```
sudo crontab -e
```

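Note that using sudo means the job runs as root, so any files it creates will be owned by root, and gdrive will look for its saved credentials in root’s home directory instead of yours. If you don’t want that, run crontab -e without sudo.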

Then add:

```
0 3 * * * /home/claytone/backups/backup.sh claytones-brain
```

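This runs the backup.sh script at 3 a.m. every day, passing claytones-brain as the name of the file to back up. The script has to be executable for cron to run it directly (chmod +x /home/claytone/backups/backup.sh).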
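If you’d rather not use sudo, one way to install the job for your own user is to pipe the current crontab through a small filter and back into crontab -, which reads the new table from standard input. This is a sketch: add_cron_line is a hypothetical helper that only appends the job when an identical line isn’t already present, so running it twice doesn’t schedule the backup twice.

```bash
#!/bin/bash

# Hypothetical helper: copy the crontab read on stdin to stdout, appending
# the job line given as $1 only if an identical line isn't already there.
add_cron_line() {
    local line=$1
    local current
    current=$(cat)

    # Re-emit the existing entries (command substitution dropped the final newline).
    if [ -n "$current" ]; then
        printf '%s\n' "$current"
    fi
    # -x: whole-line match, -F: treat the * fields literally
    if ! grep -qxF -- "$line" <<< "$current"; then
        printf '%s\n' "$line"
    fi
}
```

For example, crontab -l 2>/dev/null | add_cron_line '0 3 * * * /home/claytone/backups/backup.sh claytones-brain' | crontab - installs the job for the current user; if there is no crontab yet, crontab -l prints nothing to stdout and the result is just the new line.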

Future work

- Error checking/handling
  - What if a query returns multiple file names?
  - What if a query returns nothing?
- Help page
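One possible approach to the first two open questions is to filter the gdrive list output for an exact name match and fail unless exactly one row matches. This is a sketch under assumptions: find_unique_id is hypothetical, and it assumes the listing starts with a header row followed by rows whose first column is the file ID and second column is the name, and that the name contains no spaces.

```bash
#!/bin/bash

# Hypothetical helper: read `gdrive list` output on stdin and print the ID of
# the single row whose Name column exactly equals $1.
# Assumes column 1 is the ID, column 2 is the name, and row 1 is a header.
# Exit status: 0 = one match, 1 = no match, 2 = several matches.
find_unique_id() {
    awk -v name="$1" '
        NR > 1 && $2 == name { count++; id = $1 }
        END {
            if (count == 1) { print id; exit 0 }
            if (count == 0) { print "No file named \"" name "\" found" > "/dev/stderr"; exit 1 }
            print count " files named \"" name "\" found" > "/dev/stderr"; exit 2
        }'
}
```

In backup.sh, the lookup could then become file_id=$(gdrive list --query "name contains '$file_name'" | find_unique_id "$file_name") || exit 1, so the script stops before downloading anything when the name is missing or ambiguous.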